This post describes NoSQL Cassandra database solution with focus on data modelling aspect.

Cassandra competes in the space of NoSQL Column Family Storage engine, named under various terms like Wide Column Store or BigTable's storage in reference to the influential Google BigTable paper. Competing candidate includes the HBase engine which is a Java open source implementation of the BigTable spec. Note: I do not use the term Columnstore or Columnar database as these usually imply column-oriented DBMS like MonetDB or Vertica whose goals is to change RDBMS data storage from row-based to column based.

In general, Column Family solutions do not fit into the 3 common categories defined in NoSQL:

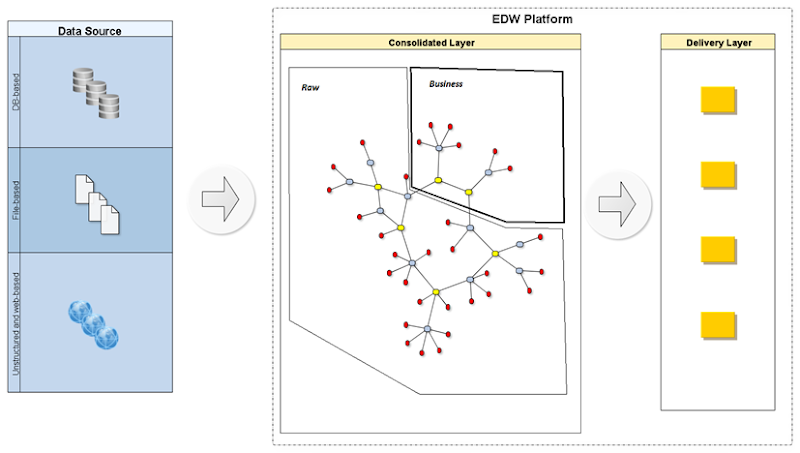

Column Family category is designed to focus on storing and processing large amount of structure and semi-structure of data in a distributed and non-relational way. In this sense, they could become an alternative or complementary solution within the data warehousing industry.

I will not go through a detail description of Cassandra Storage engine (C*), so anyone interested can find plenty of online resource, discussion and other blog around (...open source should imply open community). I will rather focus on its impact and support for structural data modelling.

The first section gives some general aspect of Column Family engine, followed by various C* data modelling structure and design criteria, and finally I'll comment on how it could be a good fit (or not) to the data warehousing and BI world.

or sales pitch, as if data modelling has now become just a burden. We should always be wary of such simplified conclusion and in terms of modelling, whatever you model upfront will be gained down the line. A complete dynamic schema-less (unknown) model can not be a sustainable and long living model, especially for mostly read-only application such as Business Intelligence and Data warehousing!

Although Column Family platforms surfed on the NoSQL movement (fill in your own definition), they do require and benefit from some level of a priori data modelling. But modelling should not be approached from the same angle as traditional relational database. The major variations originate from these key characteristics:

Keyspace -->n Columns Family --->n Row --->n ColName-ColValue-Timestpamp

Keyspace delimits your application boundary (think of Database or schema in DBMS world) and will store all related set of Column Family onto the cluster. Typical Cluster would have one Keyspace per application, and important global property are defined at this level (ex. replication factor).

Columns Family is the equivalent of a relation Table, so it contains columns and rows for storing data pertaining to the entity we are keeping track of.

Each Row is associated with one instance of this entity stored which is identified through a mandatory and unique row-key attribute. The most atomic storage unit is actually the triplet: ColumnName--ColumnValue--Timestamp, so that each row may include any number of these triplets. The timestamp is automatically generated and is internal to C* for managing data versioning and conflict resolution.

Let's loook at some data modelling design common to C*.

Row-Key | Columns

| Email Address Phone Status

| --------------------------------------------------

username1 | u@com.com address1 13332 Married

|

| Email Address Phone Status

| --------------------------------------------------

username2 | u@edu.edu address2 13333 Single

|

Note that all columns are optional, and may simply be ignored as opposed to being explicitly set as Nullable for handling missing values (as found in relational DB). In fact more importantly, the Schema-less nature means that literally all columns from row to row could change arbitrarily. Although, we would want to have some form of stability in defining column in Column Family, in order to get some form of predictability in data access pattern.

Row-Key | Columns

| Date1-12:32:24 Date2-12:33:56 Date2-13:01:32

| --------------------------------------------------

device1 | low low high

|

| Date8-17:04:24 Date8-18:33:55 Date8-18:41:32

| --------------------------------------------------

device2 | low medium high

|

Here each Column Name is defined by the recording time-stamp and the structure will grow dynamically to accommodate all upcoming recording. Although up to 2 billion columns are possible, we would limit this growing factor (for efficiency reason) by Bucketing the row-key by appending temporal data into row-key (ex. 'deviceID-day' or 'deviceID-YearMth', etc) so that one row will only store recordings for a limited time span.

More options are possible, if we wanted to sort the column time descending so that we keep the last 15 measurement points, or yet preserve only a limited number of measurement with the use of TTL (time to leave) property to automatically expire oldest column values.

Taking the previous username data, we could exploit these to store the many email addresses or physical address associated to a single user:

Row-Key | Columns

| Emails Addresses

| ---------------------------------------------------------

username1 | {u@com.com,u2@..} {Home: address1, Work: addr2 ..}

|

| Emails Addresses

| --------------------------------------------------------------

username2 | {u@edu.edu, {Home: addressHome, Work: addressW2

| m@m.com, perso@p.ch} Home2: AddrHome2}

|

C* offers rich and powerful data modelling structure but one has to keep these criteria in mind. This requires a shift in thinking in design principles for anyone coming from RDBMS world. In summary, well be doing more Data Denormalisation and Aggregation in order to:

It follows that C* may not be so suited for BI. For example consider the No Join limitation! BI tradition applications strive for supporting many ad-hoc data access paths implying joining data all over the place. The entire Star Schema design and the multidimensional counter part were created to guarantee good performance and scale no matter how many dimensions you'd want your metrics against.

Eliminating the possibility of Join mean to denormalize the entire fact/dimension tables structure into their constituents: all dimension hierarchical attributes flatten out alongside the metrics. And you'd still be limited to do key look-up query-type (or at best key range lookup). However what we need is a capacity for doing Set or Bulk read operations, involving full scan of data in order to calculate aggregate KPI or metrics!

As of now, we can see that C* is mostly an application-driven data management and storage solution targeting real time operation application (high availability and low latency). Taken from DataStax FAQ website, here are its supposedly main use cases :

I can clearly see a good use-case in the storage of real time data collected by sensors or devices, i.e. time series data. These are expected to explode with the advent of the internet of things. C* could certainly be a viable platform to help us meet the Velocity and Volumetry requirement, the famous 2 V's of the not least-famous Big Data buzz. But from earlier points, I'd say its data analytical potential would be limited to basic metric calculation like moving average, min/max and other simple data analysis. These assume data processing happening at row level as opposed to across rows! For any analytical needs crossing/joining over many dimension, you'll need to export your data to other more specialised analytical platform!

In this sense, I see C* as offering a versatile and accommodating platform to deal with the dynamic nature of columns. We can easily expand our KPI's list to the thousands or more, seemingly and elegantly! We could choose different physical design for the row grain definition, by choosing different level for the row-key :

1- row-key = customerID (columns = PeriodId_kpiInd, ...)

and keep only a few years worth of data with TTL

Row-Key | Columns

| 2014-01:Kpi1 2014-01:Kpi2 ... 2014-02:Kpi1 ....

| ---------------------------------------------------------

customer1 | 1234.76 32 ... 1657.43 ....

|

| 2014-01:Kpi1 2014-01:Kpi2 ... 2014-02:Kpi1 ....

| ---------------------------------------------------------

customer2 | 1234.76 32 ... 1657.43 ....

|

That way, we have all KPIs belonging to one customer stored in a single wide row, and leverage sequential read for doing YTD or Year-to-Year comparison calculation. We could also leverage the TTL attribute to only keep a limited number of years of historical data, and let C* manages the purging automatically.

2- row-key = customerID-PeriodId (columns = kpiInd, ...)

Row-Key | Columns

| Kpi1 Kpi2 ... KpiN ...

| ----------------------------------------

c1:2014-01 | 1234.76 32 ... 1656557.43 ...

|

| Kpi1 Kpi2 ... KpiN ...

| ----------------------------------------

c1:2014-02 | 1657.43 37 1657921.22 ...

|

Here, we'd end-up with much narrower row but would require crossing different row-keys to do customer longitudinal analysis like Year-to-Year or other KPIs comparison throughout customer lifetime.

One could think of alternative way of storing these flatten KPIs/metrics, but again, this will not be suited and ideal to do set-based and cross entities pattern analysis. Rather it should be thought of as a flexible storage mechanisms with potential and limited fine-grain analysis or one-one entity comparison.

In summary, I see a few cases where we could make use of C* for providing BI-related functionalities, however it is surely not a suitable storage solution when one is looking for a generic and global platform!

Martin

Cassandra competes in the space of NoSQL Column Family Storage engine, named under various terms like Wide Column Store or BigTable's storage in reference to the influential Google BigTable paper. Competing candidate includes the HBase engine which is a Java open source implementation of the BigTable spec. Note: I do not use the term Columnstore or Columnar database as these usually imply column-oriented DBMS like MonetDB or Vertica whose goals is to change RDBMS data storage from row-based to column based.

In general, Column Family solutions do not fit into the 3 common categories defined in NoSQL:

- KeyValue Store: Column Family does not simply store a single value per Key but rather hold many columns to handle wide data along these columns. (note K-V Store could also hold composite values but these would be kept and treated as a single object)

- Document Store: documents are usually stored using any open (as in visible) text format (JSON is the usual approach) and using web-friendly language like javascript and RESTful interface.

- Graph Database: a complete different paradigm is used here following the principles of Graph theory.

Column Family category is designed to focus on storing and processing large amount of structure and semi-structure of data in a distributed and non-relational way. In this sense, they could become an alternative or complementary solution within the data warehousing industry.

I will not go through a detail description of Cassandra Storage engine (C*), so anyone interested can find plenty of online resource, discussion and other blog around (...open source should imply open community). I will rather focus on its impact and support for structural data modelling.

The first section gives some general aspect of Column Family engine, followed by various C* data modelling structure and design criteria, and finally I'll comment on how it could be a good fit (or not) to the data warehousing and BI world.

Column Family Design highlights

NoSQL community gains attention with its- Data's row storage assumes unique Row-key

- Row-key is central to the data storage and access.. much like any K-V Storage engine

- Row-key acts like a Primary key, but without any global constraint checks enabled (share nothing requirement)

- Row-key is central to horizontal scalability obtained through the use of data Partitioning (Sharding) among all distributed nodes within a cluster. This is typically done by applying some form of hashing (ex. MD5) on the row-key.

- Row-key can be of any type, including composite, as long as it can be hashed deterministically

- Row-key is the main predicate where Query is happening (or key range)

- Schema is much more dynamic

- Forget about having to define a fix set of columns with static type and length

- You can still define columns for your table, but these are not mandatory for all row

- Collection type for a column is possible (a similar feature is found in Postgres)

- Columns can be completely arbitrary and set dynamically by application (column value and name)

- Joins are not implemented by the Storage engine

- Joining data across Table (i.e. Column Family) will require involvement of application/client layer

- Much data denormalization and increase of redundancy are usually needed to satisfy different data access path.

- Data model is the result from data access pattern analysis aiming to avoid any join between Column Family (the whole data graph is available directly from the set of columns stored along each row).

- Columns have richer and new semantics

- Columns no longer viewed only as features or attributes pertaining to each row or entity instance

- Columns can also be viewed as data instance or values, similar to when we pivot rows into columns in a relational query

- Columns can grow quite extensively ... the upper limit will probably be dictated by performance or other constraint found in your application.

Cassandra Data Architecture

At high level, C* data architecture is decomposed into these concepts:Keyspace -->n Columns Family --->n Row --->n ColName-ColValue-Timestpamp

Keyspace delimits your application boundary (think of Database or schema in DBMS world) and will store all related set of Column Family onto the cluster. Typical Cluster would have one Keyspace per application, and important global property are defined at this level (ex. replication factor).

Columns Family is the equivalent of a relation Table, so it contains columns and rows for storing data pertaining to the entity we are keeping track of.

Each Row is associated with one instance of this entity stored which is identified through a mandatory and unique row-key attribute. The most atomic storage unit is actually the triplet: ColumnName--ColumnValue--Timestamp, so that each row may include any number of these triplets. The timestamp is automatically generated and is internal to C* for managing data versioning and conflict resolution.

Let's loook at some data modelling design common to C*.

1-Simple Case:

The easiest use-case is to define a "static" set of columns within one Column-Family:Row-Key | Columns

| Email Address Phone Status

| --------------------------------------------------

username1 | u@com.com address1 13332 Married

|

| Email Address Phone Status

| --------------------------------------------------

username2 | u@edu.edu address2 13333 Single

|

| Email Address Status

| --------------------------------------

username3 | u3@com.com address3 Married

|

| Email Address

| --------------------------

username4 | u4@edu.edu address4

|

| --------------------------------------

username3 | u3@com.com address3 Married

|

| Email Address

| --------------------------

username4 | u4@edu.edu address4

|

2-Wide-row Case:

Another structure is what is known as wide-row. This is a pattern well adapted for time series data. Let's say we are recording, for a number of devices, values at fixed time point ... we may choose to store each device as one row, and generate columns dynamically into this wide-row:Row-Key | Columns

| Date1-12:32:24 Date2-12:33:56 Date2-13:01:32

| --------------------------------------------------

device1 | low low high

|

| Date8-17:04:24 Date8-18:33:55 Date8-18:41:32

| --------------------------------------------------

device2 | low medium high

|

Here each Column Name is defined by the recording time-stamp and the structure will grow dynamically to accommodate all upcoming recording. Although up to 2 billion columns are possible, we would limit this growing factor (for efficiency reason) by Bucketing the row-key by appending temporal data into row-key (ex. 'deviceID-day' or 'deviceID-YearMth', etc) so that one row will only store recordings for a limited time span.

More options are possible, if we wanted to sort the column time descending so that we keep the last 15 measurement points, or yet preserve only a limited number of measurement with the use of TTL (time to leave) property to automatically expire oldest column values.

3-Dynamic-column Case:

We can make things even more dynamic by leveraging Cassandra's usage of arbitrary and application-supplied column name to store data. In this case, we may not even need to store any value, as the column name become the value itself...

As an example, let's assume we have some user that subscribe to named service, so that we wish to keep all their subscribed service-name directly as column:

As an example, let's assume we have some user that subscribe to named service, so that we wish to keep all their subscribed service-name directly as column:

Row-Key | Columns

| service-1 service-Z service-tt

| --------------------------------

username1 |

|

| serviceEE serviceRR service-tt service-A

| ----------------------------------------------

username2 |

|

| service-1 service-Z service-tt

| --------------------------------

username1 |

|

| serviceEE serviceRR service-tt service-A

| ----------------------------------------------

username2 |

|

| service-AAA

| -----------

username3 |

|

| serviceA serviceE

| -------------------

username4 |

|

| -----------

username3 |

|

| serviceA serviceE

| -------------------

username4 |

|

No structure is given at creation time and the application provides this dynamically during runtime. This is sometimes referred to as Dynamic Column Family Design.

4-Collection-based column Case:

The one-to-many association between one row-key does not necessarily imply the creation of multiple columns for that row. One may leverage the concept of C* collection data types. This is equivalent to collection type supported in most programming language (ex. Set, List and Map).Taking the previous username data, we could exploit these to store the many email addresses or physical address associated to a single user:

Row-Key | Columns

| Emails Addresses

| ---------------------------------------------------------

username1 | {u@com.com,u2@..} {Home: address1, Work: addr2 ..}

|

| Emails Addresses

| --------------------------------------------------------------

username2 | {u@edu.edu, {Home: addressHome, Work: addressW2

| m@m.com, perso@p.ch} Home2: AddrHome2}

|

| Emails Addresses

| ---------------------------------------

username3 | {u3@com.com} {Home:address3}

|

| ---------------------------------------

username3 | {u3@com.com} {Home:address3}

|

Data model design criteria

To be able to produce useful data model, one needs a minimum of knowledge on how data is stored inside a C* cluster. Important aspect has to do with clustering. Clustering is completely symmetrical, i.e. all nodes participating in the cluster have identical characteristics: Shared Nothing, independent, identical responsibilities, self sufficient (can be added/removed on demands) with no single point of failure or contention.

Another important point is that rows identifiable by their unique row-key will always land on same node (aside replication consideration resulting in copies stored in other nodes). The full row can then be read sequentially from file data block within a single node such that random read/write can be often avoided. With that in mind, here are some criteria important for performance:

Another important point is that rows identifiable by their unique row-key will always land on same node (aside replication consideration resulting in copies stored in other nodes). The full row can then be read sequentially from file data block within a single node such that random read/write can be often avoided. With that in mind, here are some criteria important for performance:

- Accessing data by row-key will be extremely fast (data partition with key hashing can be viewed as implicit index).

- Store as much data into single Row so that the query/access pattern will involve a single logical sequential read.

- Data modelling must be aligned with data access pattern which becomes the driving factor for Data modeling!

- Denormalization becomes the norm as each data access path may involve the creation of new (redundant) Column Family.

- Design criteria has more to do read/write operations done on data than coming up with clear semantic for entity.

- Joining two Column Family is simply not possible. We must fallback to client application logic, and thus likely to be avoided in most cases. By extension, neither does C* support Sub Queries.

- Avoid set operations or bulk read/write where a large number of rows are targeted

C* offers rich and powerful data modelling structure but one has to keep these criteria in mind. This requires a shift in thinking in design principles for anyone coming from RDBMS world. In summary, well be doing more Data Denormalisation and Aggregation in order to:

- Reduce Query-I/O data transfer volume

- (keep query and processing local!)

- Reduce Query-Processing complexity (no JOINS)

- (distributed system are inherently more complex)

- Increasing total data volume storage (disk storage is cheap)

Any good use case for BI?

Eliminating the possibility of Join mean to denormalize the entire fact/dimension tables structure into their constituents: all dimension hierarchical attributes flatten out alongside the metrics. And you'd still be limited to do key look-up query-type (or at best key range lookup). However what we need is a capacity for doing Set or Bulk read operations, involving full scan of data in order to calculate aggregate KPI or metrics!

As of now, we can see that C* is mostly an application-driven data management and storage solution targeting real time operation application (high availability and low latency). Taken from DataStax FAQ website, here are its supposedly main use cases :

- Time series data management

- High-velocity device data ingestion and analysis

- Media streaming (e.g., music, movies)

- Social media input and analysis

- Online web retail (e.g., shopping carts, user transactions)

- Web log management / analysis

- Web click-stream analysis

- Real-time data analytics

- Online gaming (e.g., real-time messaging)

- Write-intensive transaction systems

- Buyer event analytics

- Risk analysis and management

I can clearly see a good use-case in the storage of real time data collected by sensors or devices, i.e. time series data. These are expected to explode with the advent of the internet of things. C* could certainly be a viable platform to help us meet the Velocity and Volumetry requirement, the famous 2 V's of the not least-famous Big Data buzz. But from earlier points, I'd say its data analytical potential would be limited to basic metric calculation like moving average, min/max and other simple data analysis. These assume data processing happening at row level as opposed to across rows! For any analytical needs crossing/joining over many dimension, you'll need to export your data to other more specialised analytical platform!

Another use case is possible if we leverage the wide row and dynamic columns aspects. It is frequent in BI that we need to compute and preserve a large number of metrics and KPI, per business entity, and calculated over some period. For example, the customer centric view (typical of CRM) need large number of metrics measuring historical behavior/performance at each customer. Or product portfolio analysis monitoring key indicators of rentability and popularity per product. These applications involves flatten and dynamic data, AKA KPI/metrics flat structure. Any RDBMS implementations will be limited in one form or another due to its static and fix nature of its data schema. One can use generic name columns, but guessing which data types will be needed in the future can be challenging. One could also build some metadata-driven engine to take care of creation/deleting metrics on-demand, but this will pose issues with timeline and historical data and storage.

In this sense, I see C* as offering a versatile and accommodating platform to deal with the dynamic nature of columns. We can easily expand our KPI's list to the thousands or more, seemingly and elegantly! We could choose different physical design for the row grain definition, by choosing different level for the row-key :

1- row-key = customerID (columns = PeriodId_kpiInd, ...)

and keep only a few years worth of data with TTL

Row-Key | Columns

| 2014-01:Kpi1 2014-01:Kpi2 ... 2014-02:Kpi1 ....

| ---------------------------------------------------------

customer1 | 1234.76 32 ... 1657.43 ....

|

| 2014-01:Kpi1 2014-01:Kpi2 ... 2014-02:Kpi1 ....

| ---------------------------------------------------------

customer2 | 1234.76 32 ... 1657.43 ....

|

That way, we have all KPIs belonging to one customer stored in a single wide row, and leverage sequential read for doing YTD or Year-to-Year comparison calculation. We could also leverage the TTL attribute to only keep a limited number of years of historical data, and let C* manages the purging automatically.

2- row-key = customerID-PeriodId (columns = kpiInd, ...)

Row-Key | Columns

| Kpi1 Kpi2 ... KpiN ...

| ----------------------------------------

c1:2014-01 | 1234.76 32 ... 1656557.43 ...

|

| Kpi1 Kpi2 ... KpiN ...

| ----------------------------------------

c1:2014-02 | 1657.43 37 1657921.22 ...

|

Here, we'd end-up with much narrower row but would require crossing different row-keys to do customer longitudinal analysis like Year-to-Year or other KPIs comparison throughout customer lifetime.

One could think of alternative way of storing these flatten KPIs/metrics, but again, this will not be suited and ideal to do set-based and cross entities pattern analysis. Rather it should be thought of as a flexible storage mechanisms with potential and limited fine-grain analysis or one-one entity comparison.

In summary, I see a few cases where we could make use of C* for providing BI-related functionalities, however it is surely not a suitable storage solution when one is looking for a generic and global platform!